CIL on the Cloud

In this blog, we will demonstrate how to use the Core Imaging Library (CIL), an open source software for imaging inverse problems, on cloud platforms like Binder and Google Colab. These platforms offer cloud-based environments with pre-installed packages and resources, making them highly suitable for teaching and training purposes. In addition, if you do not have a GPU or you are a macOS user without GPU or without the right GPU these cloud platforms serve as an excellent alternative. Or maybe you lost connection to your Windows/Linux remote machines and you really want to reconstruct a tomographic dataset.

Outline

- What is CIL?

- Cloud platforms: Google Colab and Binder

- Install CIL on Colab

- Tomography reconstruction using CIL

- CIL Demos on Colab

What is CIL?

The Core Imaging Library or CIL is an open-source Python framework for solving inverse problems in imaging with particular emphasis on tomographic imaging and reconstruction. It is supported by the Collaborative Computational Project in Tomographic Imaging (CCPi), a UK academic network, which unites expertise in the field of Computed Tomography (CT). Its aim is to provide the community with software to increase the quality and level of information that can be extracted by CT, with an emphasis in software sustainability, maintainability and distribution.

To learn more about CIL, please check the links below.

- Github Repositories

- Software Papers

- Talks and Posters

To join the CIL community, you can register at our Discord channel.

Cloud platforms

In addition to the local installation available via conda, see here, we have two alternatives to use CIL on the cloud: 1) Binder and 2) Google Colab.

-

Binder: The Binder Project offers an easy place to create sharable, interactive, reproducible computing environments. Using the mybinder.org platform we transform a github repository into a collection of interactive notebooks without any installation. So, you are one click away from our CIL demos:

-

Google Colab: Google Colaboratory, or “Colab” is a cloud-based Jupyter notebook environment. It runs on Google Cloud Platform (GCP), and provides free access to GPUs and TPUs with many pre-installed scientific libraries.

Binder or Colab

In my opinion, the easiest choice to check some interactive jupyter demos and see the interface of an open source software, is Binder. However, in this case only CPU is available which is not the best option for tomography imaging. Also, you have limited memory and space if you want to upload some tomography datasets. A couple years ago, Binder was quite fast when building your image and if the image was recently built you could see the interactive demos in less than one minute. Nowdays, Binderhub servers have a lot of activity with many users and requesting a server can take a lot of time and sometimes you will receive a message such as : Found built image, launching… Too many users on this BinderHub! Try again soon..

In the case of Colab, we have the option of a free GPU up to 16Gb, which is very useful for tomography imaging and reconstruction. Also, you can upload some datasets to your personal google drive. However, CIL or other packages are not pre-installed in you jupyter environment. Therefore, we need to use pip or conda to install our software. Pip is the default option to install python libraries on Google Colab, e.g., !pip install name_of_package. Unfortunately, Conda is not available by default and we need to install it first and then use it, e.g., !conda install name_of_package.

Please note that Colab has recently upgraded its default runtime to Python version 3.10.

The next version of Python (3.11) is scheduled to have its final regular bug fix release in April 2024. Previous version (Python 3.9) is available from the Command Palette (Ctrl+Shift+P) via the Use fallback runtime version command when connected to a runtime. This will be available until mid-May 2023. See here for more information.

Install CIL on Colab

In this section, we discuss how to install CIL and all its dependencies on Google Colab required for this notebook. In CIL, we provide wrappers for two tomography backends: 1) Astra-Toolbox and 2) TIGRE. In addition, we are going to use the CCPi-Regularisation-Toolkit which provides a set of 2D/3D regularisation methods with CPU/GPU acceleration. We follow the installation steps below:

1) Check python version on your current jupyter session. It should be Python >=3.10.

2) Install TIGRE tomography backend following similar steps to Python installation.

3) Install the condacolab package. This is the easiest way to install install conda on google colab.

4) Import condacolab and install conda.

5) Install CIL with the Astra-Toolbox backend and the CCPi-Regularisation Toolkit using conda.

You can try this notebook directly on Google Colab.

Check Python version

!python --version

Python 3.10.11

Install TIGRE

!git clone https://github.com/CERN/TIGRE.git

%cd TIGRE/Python

!python setup.py install

!python example.py

Install CondaColab

!pip install -q condacolab

import condacolab

Install Conda

Note: Please wait for the kernel to restart.

condacolab.install()

Install CIL

Using the command below we install CIL, Astra-Toolbox and the CCPi-Regularisation toolkit via conda or mamba. We are going to use Mamba, a fast cross-platform package manager from Quantstack.

!mamba install -c conda-forge -c intel -c ccpi cil=23.0.1 astra-toolbox ccpi-regulariser "ipywidgets<8" --quiet

!cp -r /usr/local/lib/python3.10/site-packages/astra_toolbox-2.0.0-py3.10-linux-x86_64.egg/astra /usr/local/lib/python3.10/site-packages/

Check CIL version

import cil.version

print(cil.version.version)

23.0.1

Tomography reconstruction using CIL

We are now ready to use CIL to perform tomography reconstruction on a real tomography dataset.

Limited Angle Tomography Reconstruction

We are going to use a three-dimensional parallel-beam X-ray CT real dataset from Beamline I13-2, Diamond Light Source, Harwell, UK.

The sample consisted of a 0.5 mm aluminium cylinder with a piece of steel wire embedded in a small drilled hole. A droplet of salt water was placed on top, causing corrosion to form hydrogen bubbles. The dataset, which was part of a fast time-lapse experiment, consists of 91 projections over 180, originally acquired as size 2560-by-2160 pixels, and it is downsampled to 160-by-135 pixels see here.

We reconstruct this dataset using analytic and iterative reconstruction methods with a limited number of projections:

-

the Filtered Back Projection (FBP) algorithm,

-

Total variation (TV) regularisation under a non-negativity constraint

\(\begin{equation} \underset{u}{\operatorname{argmin}} \frac{1}{2} \| A u - d\|^{2}_{2} + \alpha\,\mathrm{TV}(u) + \mathbb{I}_{\{u\geq0\}}(u) \end{equation}\)

where, \(d\) is the noisy sinogram and \(A\) is the Projection operator.

Import libraries

from cil.framework import AcquisitionGeometry

from cil.processors import TransmissionAbsorptionConverter, Slicer, CentreOfRotationCorrector

from cil.optimisation.functions import L2NormSquared

from cil.optimisation.algorithms import PDHG

from cil.utilities.display import show2D, show_geometry

from cil.utilities import dataexample

from cil.utilities.jupyter import islicer

from cil.plugins.ccpi_regularisation.functions import FGP_TV

import matplotlib.pyplot as plt

import numpy as np

Import tomography backends: ASTRA and TIGRE

# Astra Backend

from cil.plugins.astra import ProjectionOperator as AstraProjectionOperator

from cil.plugins.astra import FBP as AstraFBP

# TIGRE backend

from cil.plugins.tigre import ProjectionOperator as TigreProjectionOperator

from cil.plugins.tigre import FBP as TigreFBP

Load and examine dataset

# data_raw = dataexample.SYNCHROTRON_PARALLEL_BEAM_DATA.get() # local installation

data_raw = dataexample.SYNCHROTRON_PARALLEL_BEAM_DATA.get(data_dir = "/usr/local/share/cil") # google colab

Preprocess Acquisition Data

In the code above, we preprocess our acquired data:

- Convert to Absorption using the Beer-Lambert law.

- Correct for centre of rotation artifacts.

- Simulate a limited angled tomography dataset. Our raw data has 91 projections, but we are going to use only 15 of them.

background = data_raw.get_slice(vertical=20).mean()

data_raw /= background

# Lambert-Beer law

data_abs = TransmissionAbsorptionConverter()(data_raw)

data_crop = Slicer(roi={'vertical': (1, None)})(data_abs)

# Reorder the shape of sinogram only if astra backend is used

data_crop.reorder(order='astra')

# Correct centre of rotation artifacts

data_centred = CentreOfRotationCorrector.xcorrelation()(data_crop)

# Reduce number of projections

data_sliced = Slicer(roi={'angle': (0, 90, 6), 'horizontal': (20,140,1)})(data_centred)

# Get acquisition geometry

ag = data_sliced.geometry

# Get image geometry

ig = ag.get_ImageGeometry()

Show Acquisition Geometry

show_geometry(ag)

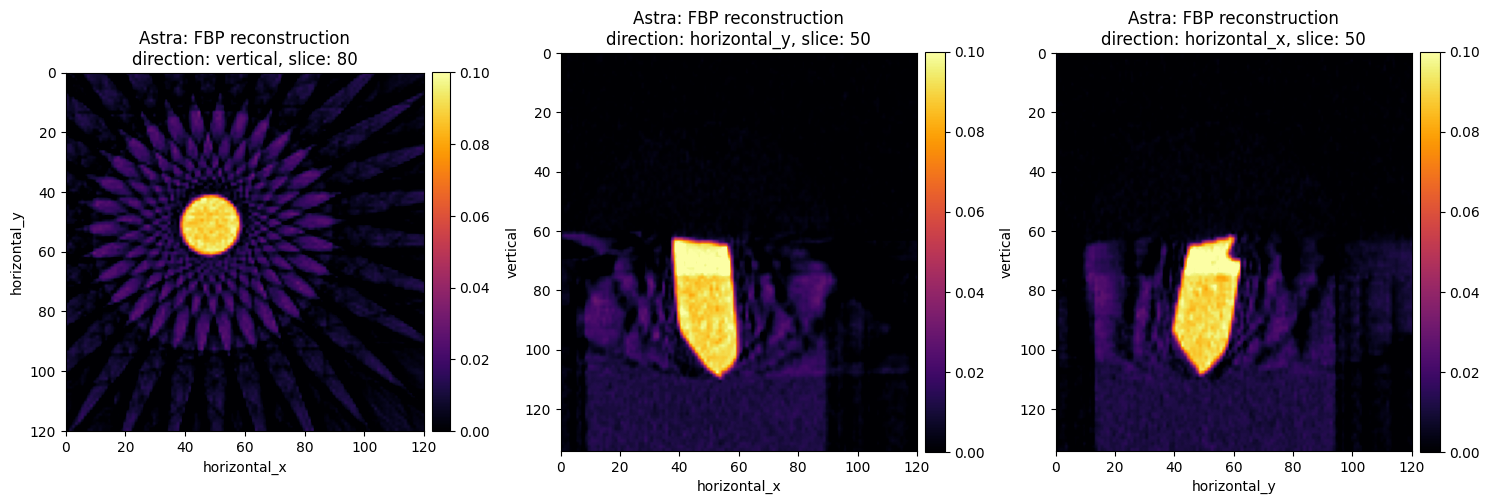

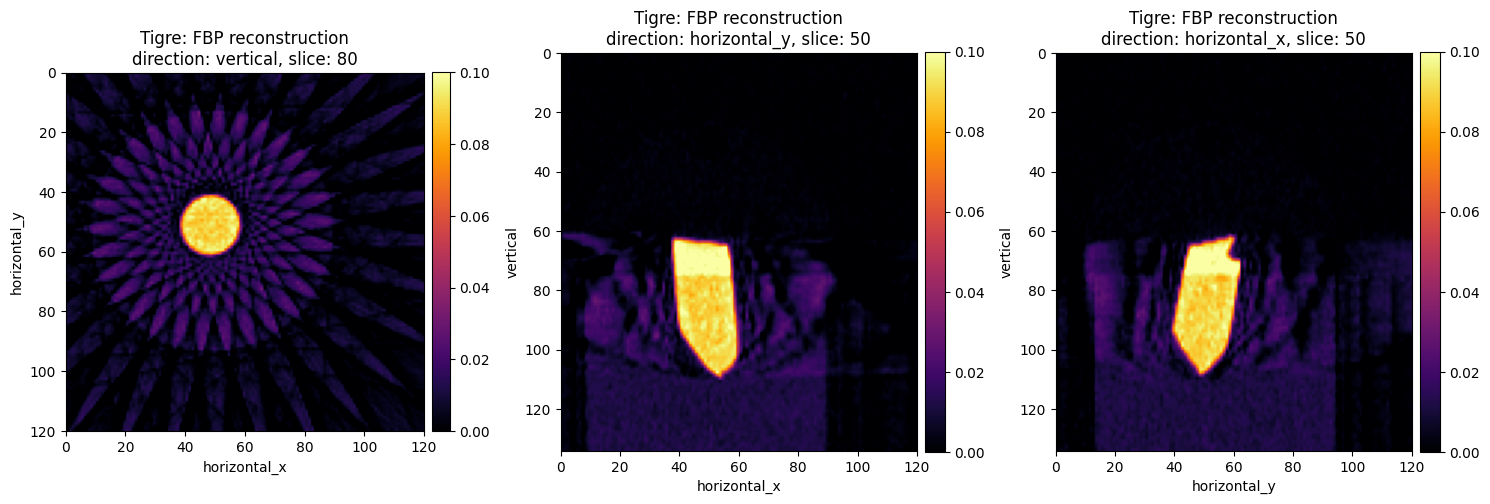

Run analytic reconstruction: FBP using Astra and Tigre

data_sliced.reorder('tigre')

fbp_recon_tigre = TigreFBP(ig, ag, device='gpu')(data_sliced)

data_sliced.reorder('astra')

fbp_recon_astra = AstraFBP(ig, ag, device='gpu')(data_sliced)

# visualise reconstruction results

show2D(fbp_recon_astra, slice_list=[('vertical',80),

('horizontal_y',50),

('horizontal_x',50)], \

cmap="inferno", num_cols=3, size=(15,15), title="Astra: FBP reconstruction",

fix_range = (0,0.1),

origin='upper-left')

show2D(fbp_recon_tigre, slice_list=[('vertical',80),

('horizontal_y',50),

('horizontal_x',50)], \

cmap="inferno", num_cols=3, size=(15,15), title="Tigre: FBP reconstruction",

fix_range = (0,0.1),

origin='upper-left')

Run Model-Based iterative reconstruction: Total variation reconstruction

The images on the first column are perfect if you are interested to very cool and futuristic star-shaped art. If we examine other slices they are far from perfect for tomography reconstruction. These artifacts are well known when we use analytic reconstruction algoritms on data with a limited number of projections, see Core Imaging Library–Part I: a versatile Python framework for tomographic imaging.

To reduce these artifacts, we are going to use Model-Based Iterative Reconstruction (MBIR) algorithms. We use the Primal-Dual Hybrid Gradient (PDHG) with Total Variation (TV) regularisation under a non-negativity constraint.

The PDHG algorithm solves the following problem:

\(\begin{equation} \underset{x\in \mathbb{X} }{\operatorname{argmin}} f(Kx) + g(x) \tag{2} \end{equation}\)

which is the sum of a composite function \(f\) with a linear operator \(K\) and a proximable function \(g\).

So, in order to use the PDHG algorithm in CIL, we need to find the triplet $(f, g, K)$ in order to express the minimisation problem (1) to the general PDHG form (2).

-

In our case, we have \(K = A\) , i.e., our Astra projection operator

A = AstraProjectionOperator(ig, ag, device="gpu")Note: cpu option is not available for the TIGRE backend -

The first term in (1), also called fidelity term, is \(f(u) = \frac{1}{2}\|Au - d\|^{2}\) and in CIL syntax, is

F = LeastSquares(A=A, b=data_centred, c=0.5) -

The second term in (1) is the TV regulariser with a non-negativity constraint and in CIL syntax is

G = alpha * FGP_TV(nonnegativity=True, device = "gpu"), wherealphais the regularisation parameter.

Now, it is time to run the PDHG algorithm, for 100 iterations. We can initialise our algorithm from an array of zeros, ig.allocate() or the previous FBP reconstruction. We also print the objective of the primal problem every 10 iterations using verbose=1

# Projection Operator

A = AstraProjectionOperator(ig, ag, device="gpu")

# Fidelity term

F = 0.5*L2NormSquared(b=data_sliced)

# TV regularization

alpha = 0.2

G = alpha * FGP_TV(nonnegativity=True, device = "gpu")

# setup and run PDHG algorithm

pdhg = PDHG(initial=fbp_recon_astra, f=F, g=G, operator=A,

update_objective_interval=10,max_iteration=100)

pdhg.run(verbose=1)

Iter Max Iter Time/Iter Objective

[s]

0 100 0.000 3.81659e+03

10 100 0.176 1.78921e+02

20 100 0.172 1.60171e+02

30 100 0.172 1.56264e+02

40 100 0.175 1.55244e+02

50 100 0.175 1.54886e+02

60 100 0.176 1.54706e+02

70 100 0.175 1.54649e+02

80 100 0.176 1.54622e+02

90 100 0.176 1.54605e+02

100 100 0.177 1.54597e+02

-------------------------------------------------------

100 100 0.177 1.54597e+02

Stop criterion has been reached.

show2D(pdhg.solution, slice_list=[('vertical',80),

('horizontal_y',50),

('horizontal_x',50)], \

cmap="inferno", num_cols=3,title="TV: alpha = {}".format(alpha),

fix_range=(0,0.1), origin='upper')

Discussion

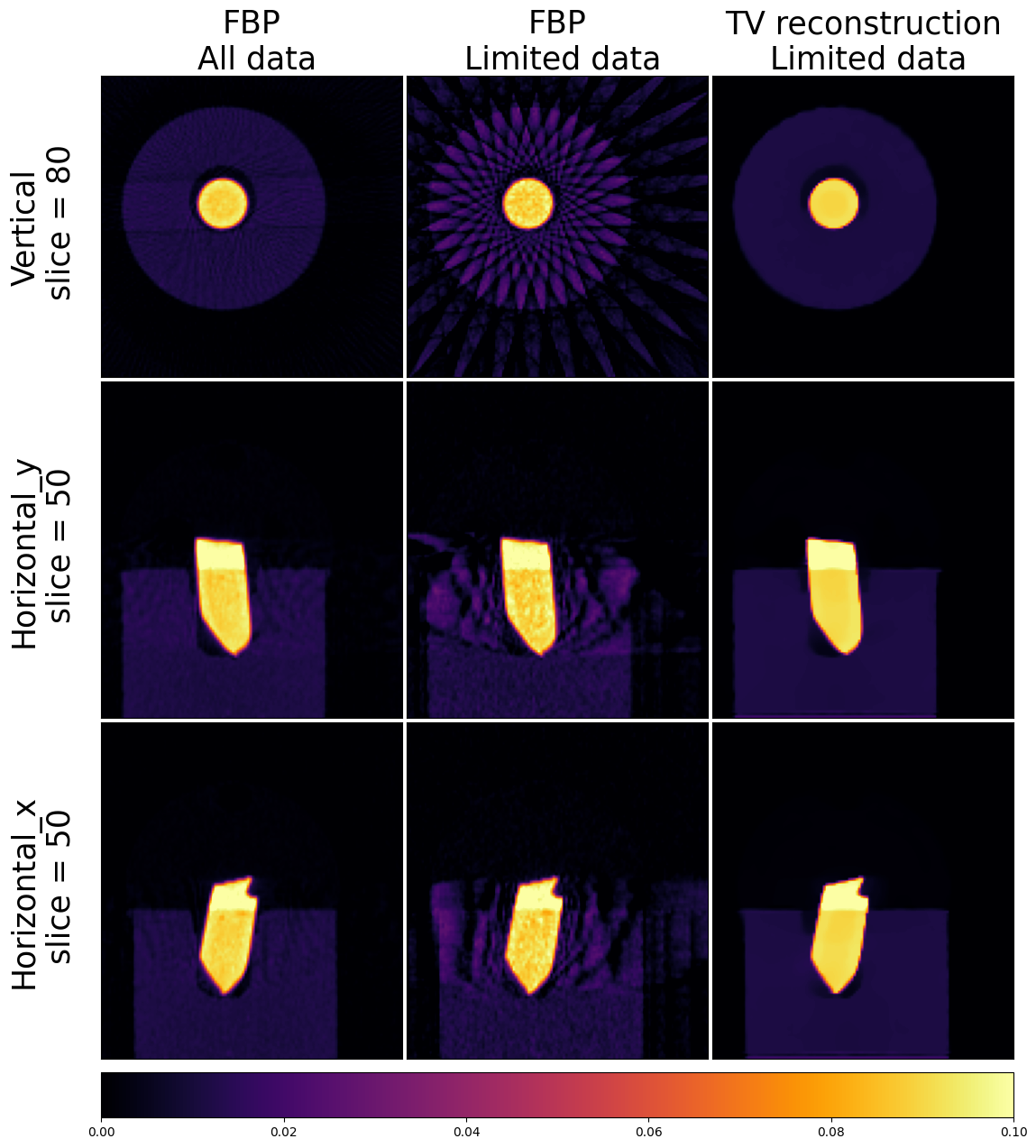

Using the iterative reconstruction approach, we successfully eliminated the noise and streak artifacts from our initial analytic reconstruction. However, in order to determine whether the resulting reconstruction is good or bad requires further evaluation and assessment. For this particular dataset, we can perform the following comparison. We can reconstruct our dataset using all 91 projections with the FBP algorithm and compare it with our TV reconstructed image.

data_full = Slicer(roi={'horizontal': (20,140,1)})(data_centred)

ag_full = data_full.geometry

ig_full = ag_full.get_ImageGeometry()

fbp_full_astra = AstraFBP(ig_full, ag_full, device='gpu')(data_full)

all_recons = [fbp_full_astra.get_slice(vertical=80), fbp_recon_astra.get_slice(vertical=80), pdhg.solution.get_slice(vertical=80),

fbp_full_astra.get_slice(horizontal_y=50), fbp_recon_astra.get_slice(horizontal_y=50), pdhg.solution.get_slice(horizontal_y=50),

fbp_full_astra.get_slice(horizontal_x=50), fbp_recon_astra.get_slice(horizontal_x=50), pdhg.solution.get_slice(horizontal_x=50)]

from mpl_toolkits.axes_grid1 import AxesGrid

from mpl_toolkits.axes_grid1.anchored_artists import AnchoredSizeBar

labels_x = ["FBP\n All data", "FBP\n Limited data","TV reconstruction\n Limited data"]

labels_y = ["Vertical\n slice = 80", "Horizontal_y\n slice = 50", "Horizontal_x\n slice = 50"]

fig = plt.figure(figsize=(15, 15))

nrows, ncols = 3, 3

grid = AxesGrid(fig, 111,

nrows_ncols=(nrows, ncols),

axes_pad=0.05,

cbar_mode='single',

cbar_location='bottom',

cbar_size = 0.5,

cbar_pad=0.1

)

k = 0

for ax in grid:

im = ax.imshow(all_recons[k].array, vmin=0, vmax=0.1, cmap="inferno")

if k in [0, 1, 2]:

ax.set_title(labels_x[k],fontsize=25)

if k in [0, 3, 6]:

ax.set_ylabel(labels_y[np.divmod(k,nrows)[0]],fontsize=25, labelpad=20)

ax.set_xticks([])

ax.set_yticks([])

k+=1

cbar = ax.cax.colorbar(im)

plt.show()

More CIL-Demos

To try more demos on Google Colab, please visit our CIL-Demos repository and load one of our notebooks to the Google Colab platform and repeat the installation steps above. Please note that for some notebooks you need to download first the dataset required to run the notebook.